Once upon a time, the infamous advertising industry pundit known as the Ad Mad Dude attempted to expose pop duo Strings of plagiarising the music used in two songs that were published on Velo Sound Station.

Ripping off the harmonic material for The Dark Side by Muse for the song “Pyaar Ka Rog” by Strings and subsequently ripping off the melodic and rhythmic material for “Haven’t Met You Yet” by Michael Bublé for the song “Mere Dil Ne” by Sara Haider & Uzair Jaswal, the Ad Mad Dude edited videos showing both songs followed by their poorly mimicked versions.

Upon smashing the publish button on both Facebook and YouTube, the Ad Mad Dude was met by messages that the content had been blocked from being uploaded due to copyright issues. The technology which detects copyrighted visuals and audio is known as Content ID on YouTube, while Facebook uses its own digital fingerprinting system.

Both companies have in place machine learning algorithms that flag advertisers when they attempt to upload videos or photos – in the form of ads – that do not line up with the rules of their specific platform. The point is if these companies have technology in place to detect and unpublish content that is detected to be abusive, copyright infringement, and goes against fixed advertising formats, the technology can be reformatted to blacklist keywords, content, and videos that stand in the way of peace and stability.

The decision-makers at the PTA need to establish a liaison office with the public affairs teams of Facebook, Google, Twitter, Bytedance, and many more social & digital media app companies in order to map out policies of working together as well as scenario planning on how specific content will be curtailed for the public good.

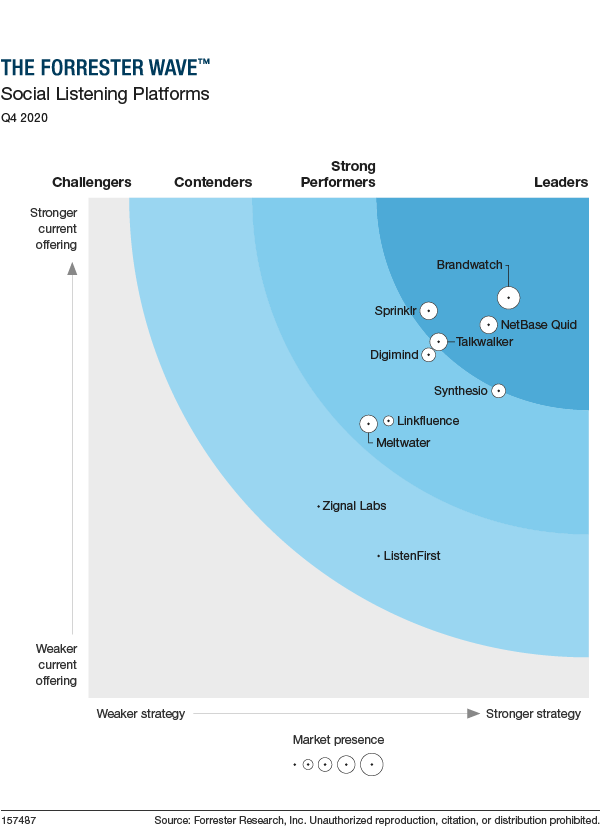

Had this been in place, on Friday the PTA need only use social listening tools – such as Brandwatch, Digimind, Linkfluence, ListenFirst, and Meltwater – to identify the accounts that are publishing or sharing the content deemed inappropriate. These accounts would be placed on a blacklist and shared with the public affairs liaison of the technology platform, which is then expected to either block or ban the account altogether.

With a Privacy Sandbox model, if the aforementioned technology companies interface with the PTA through a collective interest group – which they should – the metadata on the blacklisted accounts can be used to determine what other social media accounts they have and block or ban those too.

The PTA can then use predictive analytics and machine learning (PAML) to identify accounts that will be for the extremist narrative that they want to be shelved, sharing suspicions with the collective interest group in real-time for the subsequent block or ban.

While suppression is ongoing, they also need to enhance the reach of key opinion leaders that are sharing the ground realities that have been vetted. In doing the above, the PTA need not rob the entire nation of essential services just to quell the agenda of a few sociopaths.

And what about live videos? In 2018, researchers from the University of Notre Dame developed a crowdsourcing-based copyright infringement detection (CCID) scheme by exploring a rich set of valuable clues from live chat messages.

“Our solution is motivated by the observation that the live chat messages from the online audience of a video could reveal important information of copyright infringement,” said the researchers. “If a video stream is copyright-infringing, the audience sometimes colludes with the streamers by reminding them to change the title of the stream to bypass the platform’s detection system. However, such colluding behavior actually serves as a “signal” that the stream has copyright issues.”

The researchers add that the semantics powering the signal can be applied to cut off propaganda and hate speech as well and acts of violence or vandalism aired live, using comments from viewers to feed the scheme. The meta-data of the videos such as view counts and the number of likes/dislikes can help in early detection as well.

In 2020, researchers from the Qatar Computing Research Institute found that the XGBoost classification algorithm is an effective model for online hate detection using multi-platform data. The researchers generous made their code publicly available for application in real software systems as well as for further development by online hate researchers. A simple Google search can help decision-makers at the PTA find many more workarounds.

For bureaucrats who value the ease of doing business index and subsequently the rate at which FDI flows into Pakistan, this approach – which is based on existing technology and frameworks that take roughly a week to set up – signals to the businesses worldwide that the regulator for telecommunication in Pakistan is led by technocrats who are up to speed on how artificial intelligence works, instead of the current perception that digital immigrants are at the wheel.

It gives more app publishers the confidence to formally approach Pakistan as a potential market for doing business, reassured that the regulator has taken proactive intelligent steps in place to foster coordination and nearly eliminate the need for bans due to user-generated content.

There is an issue. This is assuming that Facebook, Google will be happy to comply with PTA. Big Tech doesn’t necessarily comply with countries’ orders if they expect loss in revenue and don’t have incentive to do so. And if PTA tries to implement something itself on platforms hosted by big tech, they will simple limit services which only exacerbates the issue as plentiful Pakistanis will take to the streets insisting that PTA back down. This was a similar case in China, except China was able to develop its own search engine, social media unlike Pakistan. If anything, it is time people of Pakistan should develop their own search engines and social media apps, but I guess that is not going to be happening anytime soon.